https://www.ndepend.com version 2017.3.1 Professional Edition

NDepend is a static code analysis tool to improve code quality, visualize dependencies and reduce technical debt.

What’s included

NDepend ships as a zip file containing the application suite; the contents can be extracted into any folder you like. The root folder of the package includes the following files:

- Console.exe – Command-line version of NDepend for use by Continuous Integration (CI) tools and build servers.

- PowerTools.exe – Interactive command-line tool that serves as an example of how developers can use the API. The source code is included in the NDepend.PowerTools.SourceCode subfolder.

- VisualStudioExtension.Installer.exe – Installs the extension for Visual Studio

- VisualNDepend.exe – Standalone version of NDepend

There is an Integration subfolder with detailed information on how to configure NDepend with your favorite Continuous Integration (CI) servers. (You are using a CI server, right?) On the web site, there are extensions and configurations for many of the most popular CI servers, including TeamCity, CruiseControl.NET, FinalBuilder and others. Visual Studio Team Services and TFS 2017 support is provided by an extension downloaded from the Visual Studio Marketplace. After a full analysis, a web page is created with details from the analysis, which is great for inclusion in a CI system.

NDepend rules are written in a LINQ syntax, and the source of each rule is displayed when looking at its issues. The rule source also contains a description with suggestions on how to correct the issue, which I found helpful when the issue itself was not clear. The rules can also be disabled on a per-project basis.

Here’s an example of the LINQ syntax (note the description section):

// <Name>High issues (grouped per rules)</Name>

let issues = Issues.Where(i => i.Severity == Severity.High)

let rules = issues.ToLookup(i => i.Rule)

from grouping in rules

let r = grouping.Key

let ruleIssues = grouping.ToArray()

orderby ruleIssues.Length descending

let debt = ruleIssues.Sum(i => i.Debt)

let annualInterest = ruleIssues.Sum(i => i.AnnualInterest)

let breakingPoint = debt.BreakingPoint(annualInterest)

select new { r,

ruleIssues,

debt,

annualInterest,

breakingPoint,

Category = r.Category

}

//<Description>

// Issues with a High severity level should be fixed quickly, but can wait until the next scheduled interval.

//

// **Debt**: Estimated effort to fix the rule issues.

//

// **Annual Interest**: Estimated annual cost to leave the rule issues unfixed.

//

// **Breaking Point**: Estimated point in time from now, when leaving the

// rule issues unfixed cost as much as fixing the issues.

// A low value indicates easy-to-fix issues with high annual interest.

// This value can be used to prioritize issues fix, to significantly

// reduce interest with minimum effort.

//

// **Unfold** the cell in *ruleIssues* column to preview all its issues.

//

// **Double-click a rule** to edit the rule and list all its issues.

//

// More documentation: http://www.ndepend.com/docs/technical-debt

//</Description>
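To make the Debt, Annual Interest and Breaking Point columns more concrete, here is a rough Python sketch of what the rule above computes. It is not NDepend code: the `Issue` class, the hour values and the sample data are made up for illustration, and the breaking point is assumed to be debt divided by annual interest, expressed in years.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Issue:
    """Hypothetical stand-in for an NDepend issue (not the real API)."""
    rule: str              # name of the rule that raised the issue
    severity: str          # e.g. "High" or "Low"
    debt_hours: float      # estimated effort to fix the issue
    interest_hours: float  # estimated yearly cost of leaving it unfixed


def high_issues_per_rule(issues):
    """Group High-severity issues per rule, rules with most issues first."""
    groups = defaultdict(list)
    for issue in issues:
        if issue.severity == "High":
            groups[issue.rule].append(issue)

    rows = []
    for rule, rule_issues in groups.items():
        debt = sum(i.debt_hours for i in rule_issues)
        interest = sum(i.interest_hours for i in rule_issues)
        # Assumption: breaking point = debt / annual interest, i.e. the
        # number of years until not fixing costs as much as fixing.
        breaking_point = debt / interest if interest else float("inf")
        rows.append({
            "rule": rule,
            "count": len(rule_issues),
            "debt": debt,
            "annual_interest": interest,
            "breaking_point_years": breaking_point,
        })
    # Mirrors "orderby ruleIssues.Length descending" in the rule above.
    rows.sort(key=lambda r: r["count"], reverse=True)
    return rows


# Made-up sample data, for illustration only.
sample = [
    Issue("Avoid methods too big", "High", 2.0, 1.0),
    Issue("Avoid methods too big", "High", 1.0, 0.5),
    Issue("Avoid types too big", "High", 4.0, 8.0),
    Issue("Naming", "Low", 0.5, 0.1),
]

for row in high_issues_per_rule(sample):
    print(row)
```

In this sample, the second rule has more debt but a much shorter breaking point, which is the kind of issue the description suggests fixing first.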

Installation and First Use

The first thing I did was install the Visual Studio extension, which was not the best move. My favorite add-in is ReSharper, but installing NDepend broke ReSharper and caused Visual Studio to become very unstable. After several install/uninstall rounds, I figured out that I could disable the automatic analysis in favor of on-request analysis, which made VS more stable, although it still had issues. With the newest version of NDepend, I was able to install the extension in Visual Studio 2017 without any instability. This review is based on the standalone version, which worked just fine for all the tests I ran.

For the purposes of this review, I will be using a project called SchemaZen, found at https://github.com/sethreno/schemazen. I was already working on this project, and it has unit tests, which I thought was important for the analysis. I needed to make a few changes to the project: I wanted a command-line parameter for the command timeout, and I wanted tables, foreign keys and other table schemas to be in the same folder.

The process followed for this review:

- Pull the latest version from GitHub

- Load in VS2017, Full Rebuild

- NDepend – First Analysis

- Perform the code changes

- Rebuild

- NDepend – Second Analysis

First Analysis

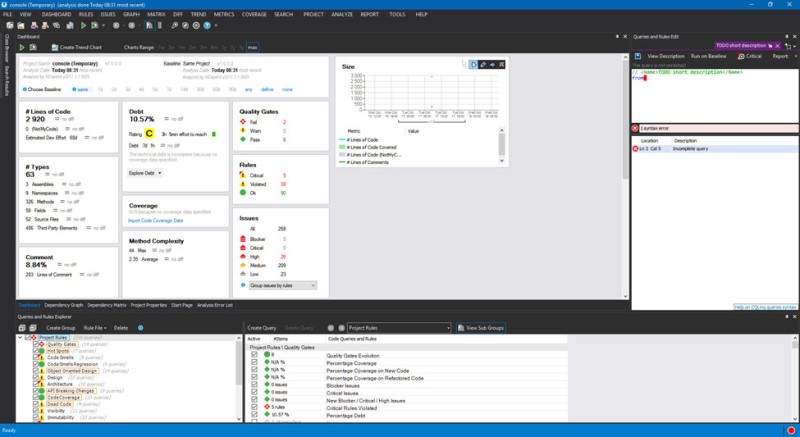

The information provided on the first analysis is pretty informative. Let’s break down some of the key points.

Code counts and diffs between versions (more on that in the second analysis).

The Debt, Coverage, Complexity and overall code quality section. This project is pretty good with a C rating, but of course it could be better, and the estimated time to get to a B is just a few hours. The 258 issues include 26 high-ranked items.

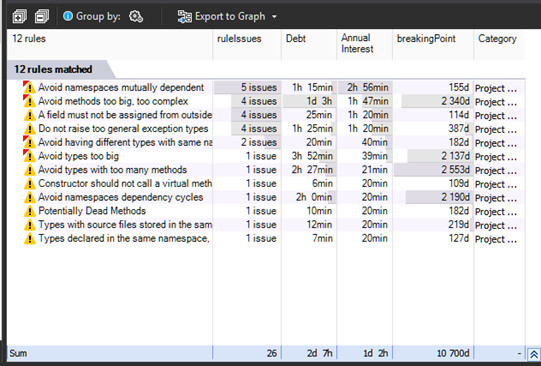

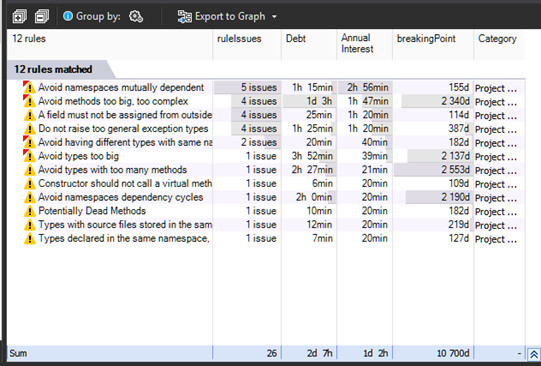

A click on the High Issues produces this list:

Long functions and classes are a part of this solution so I understand the list of issues.

Second Analysis

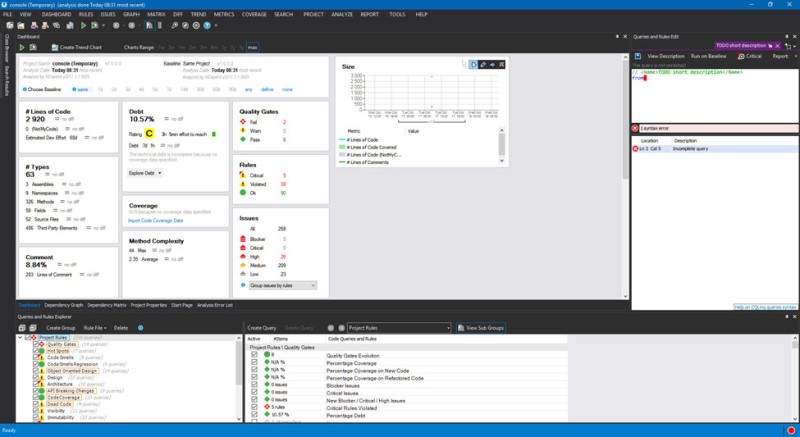

I added my code changes with the following result:

It appears my changes were good for functionality but bad for the long-term stability of the application.

Observations

From a first-use standpoint, one might conclude that NDepend only provides simple information, but the real benefits come from long-term use. The ability to see positive and negative changes over time helps in making concrete plans to clean up the code; without such a tool, tracking those changes could be a full-time challenge. Incorporating NDepend in the build process would help the development staff understand how even the smallest changes affect the long-term stability of the application.
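The idea of tracking changes against an earlier run can be sketched in a few lines of Python. This is only an illustration of the concept, not how NDepend implements baselines: the function names, the rule names and the issue counts below are all hypothetical.

```python
def compare_to_baseline(baseline, current):
    """Return the per-rule change in issue count (current minus baseline)."""
    rules = set(baseline) | set(current)
    return {r: current.get(r, 0) - baseline.get(r, 0) for r in sorted(rules)}


def build_gate(baseline, current):
    """Pass only when no rule has more issues than it had in the baseline."""
    delta = compare_to_baseline(baseline, current)
    worse = {rule: d for rule, d in delta.items() if d > 0}
    return len(worse) == 0, worse


# Hypothetical issue counts per rule for two analysis runs.
first_run = {"Methods too big": 10, "Types too big": 4}
second_run = {"Methods too big": 12, "Types too big": 3, "Too many parameters": 1}

passed, regressions = build_gate(first_run, second_run)
print("passed:", passed)
print("regressions:", regressions)
```

A build step along these lines would flag a change set that adds issues even when the overall count looks roughly stable.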

Architecture is a key factor in maintaining the application in the long term. The Dependency Matrix view helps show where code is used and how heavily.
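For readers who have not seen a dependency matrix before, the following Python sketch shows the general idea on a toy example. It is not NDepend's implementation, and the class names and usage pairs are invented: each row is a caller, each column is a callee, and each cell counts how often the row element uses the column element.

```python
from collections import Counter


def dependency_matrix(usages):
    """Build a matrix from (caller, callee) pairs.

    Returns (names, matrix) where matrix[i][j] is the number of times
    names[i] uses names[j].
    """
    counts = Counter(usages)
    names = sorted({name for pair in counts for name in pair})
    index = {name: i for i, name in enumerate(names)}
    matrix = [[0] * len(names) for _ in names]
    for (caller, callee), n in counts.items():
        matrix[index[caller]][index[callee]] = n
    return names, matrix


def mutual_dependencies(names, matrix):
    """List pairs of elements that use each other (direct two-way coupling)."""
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if matrix[i][j] and matrix[j][i]:
                pairs.append((names[i], names[j]))
    return pairs


# Invented usage data, for illustration only.
usages = [
    ("Program", "Database"),
    ("Program", "Database"),
    ("Database", "Table"),
    ("Table", "Column"),
    ("Table", "Database"),
]

names, matrix = dependency_matrix(usages)
print(names)
for name, row in zip(names, matrix):
    print(f"{name:10}", row)
print("two-way coupling:", mutual_dependencies(names, matrix))
```

Cells filled on both sides of the diagonal point at elements that depend on each other, which are usually the first candidates for refactoring.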

Conclusion

The analysis provided by NDepend is excellent even for smaller projects. I found it most useful to start with a smaller project until I better understood the interface and the information provided. When I analyzed our huge internal application, the results were overwhelming (over 2K types, 574K IL instructions, etc.). This is where NDepend could help over time, as that initial run would be used as the baseline for future analyses. The standalone NDepend application is fast and churned through our application in about a minute. In our application, the dependency matrix was very useful in determining how to refactor the code to reduce direct coupling of classes and methods. From a code review perspective, NDepend can help our team focus more on direct coupling as well as object relationships. I still believe in code reviews and will still push to have the development team perform them, as they are a good learning tool as well as a good way to provide complete business benefit. NDepend is an excellent tool for wrangling the code into best practices and bringing to light potential long-term code issues without spending weeks or months manually analyzing the code base.